In my previous assignment, I learned the concepts behind neural networks and then implemented one myself. In this project I will again be implementing neural networks; however, instead of writing all of the code myself, I will be learning to work with neural network libraries. Here is a screencast I created going over my work in this project.

Deep Learning Libraries

When actually working in deep learning, you generally do not write all of your own code; instead, you use deep learning libraries. What a library does is best explained by looking at the equation for a neural net:

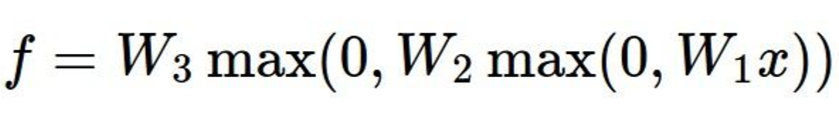

Here f represents some function and x represents some input. What changes from one network to another is f, and that is what you use a deep learning library for. Applying different functions to your data will yield different results and, if done correctly, better results. A deep learning library stores a large collection of functions that can be easily accessed. Libraries also make it easy to mix and match these functions, stacking them on top of one another to form multi-layer neural networks.
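As a rough illustration of stacking functions, here is a minimal pure-Python sketch that treats each layer as a function and composes them, so that a two-layer network computes f2(relu(f1(x))). The helper names `linear`, `relu`, and `compose`, the layer sizes, and the weights are all hypothetical; a real library such as Torch provides its own, much faster, layer modules.

```python
from functools import reduce
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]

def linear(weights: List[List[float]], bias: Vector) -> Layer:
    """Return a hypothetical fully connected layer computing W x + b."""
    def apply(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, bias)]
    return apply

def relu(x: Vector) -> Vector:
    """Element-wise ReLU nonlinearity: max(0, v)."""
    return [max(0.0, v) for v in x]

def compose(*layers: Layer) -> Layer:
    """Chain layers left to right, so compose(f1, g, f2)(x) == f2(g(f1(x)))."""
    return lambda x: reduce(lambda acc, layer: layer(acc), layers, x)

# A tiny 2 -> 3 -> 1 network with made-up weights.
layer1 = linear([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, 0.0])
layer2 = linear([[1.0, 1.0, 1.0]], [0.5])
net = compose(layer1, relu, layer2)

print(net([2.0, 1.0]))  # prints [3.0]
```

Swapping `relu` for another function, or inserting more `linear` layers into `compose`, changes f without touching the rest of the code, which is the kind of mixing and matching a library makes convenient.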

This allows you to "design" your neural networks, a skill I have not yet learned but will definitely get to over the summer. For me, this assignment was just about learning how to use a library. That is easier said than done, as figuring out how to install and then use the libraries can be tedious. My first goal was to run a neural network on the MNIST dataset using the deep learning library Torch. Following this tutorial, I accomplished this in about 6 hours. After training, the error was 0.0008%. Better than a human!
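For reference, the classification error reported after training is usually the percentage of examples whose predicted digit differs from the true label. Here is a minimal sketch of that calculation; the function name `error_rate` and the predictions and labels are hypothetical, not the actual MNIST results from this project.

```python
from typing import Sequence

def error_rate(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Percentage of examples where the predicted class differs from the label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    wrong = sum(1 for p, y in zip(predictions, labels) if p != y)
    return 100.0 * wrong / len(labels)

# Hypothetical digit predictions for 8 images; the last one is misclassified.
preds = [7, 2, 1, 0, 4, 1, 4, 9]
labels = [7, 2, 1, 0, 4, 1, 4, 8]
print(error_rate(preds, labels))  # prints 12.5
```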

I also spent a few hours trying to get another deep learning library, Caffe, up and running with the MNIST dataset. However, installation has proved tricky, so I will finish it in the future.

Deep learning on Lord of the Rings!

After this, I found a really cool project that Andrej Karpathy, the graduate student who taught CS231n, created using Torch: it trains a neural network on a large body of text and then attempts to write text of its own. He trained a network on Shakespeare and got some awesome results, so I figured I would give it a try using the Lord of the Rings trilogy. After 1000 iterations, it produced:

the itt no pounters here the casmose on the fillower.He mare and hail spide and quietiiss, and the smirnol. But not in sipar Back! the ever along what we ho angs headd heating and lave t he dromned.

Thearine in had a vadeereled word Sarcore of wave heTouch Ho bout! Lethlost clomend over in hizl whe for fagh to lake it for moby, And

2000 iterations:

fert; and they ithersed: whiits heme pacching sow. In the ring Picpl of the Lount heed plang wiet agetury turn-reveslous. I am jope over the younce by wasgancly the drey nepurst had ppinings. Then wery south a

drift. I the stommband

light then land, shat it swall, sowenthe

frodingtell, from there mark be mounto amight truge light things. But it trick

3000 iterations:

sh. He stooded to him. He could folk on the quiet him, and their comfant to the mole of the fither-leaves of Bag Forn led the ploce still made, howt

of the Inlady that with had feir yards of the party and far ever or smill, and

in the days were looked at out of hissoftle landering

evil and other hobbits shust and other and far knowir that in the tumber of the Forest the

hobbits for the

4000 iterations:

It shilld he did not get out of the mists, and the blue, side of Pippin when the griving over the stone if he turned between fair.

Not destentes

in the Wise moved it. The

bidden was said, and filled on foot breath-forts.

The hobbits

would make through the precetury,

Mo, though

Sprence

shoulders could hust be time be shot that have been any south and had in

5000 iterations:

sh. It should not find him them all the path, like silence, and

neighed in the honest in!20

the fellowship of

the ring

looking more to be no more. Nor change or markwours again, hu! Or if you think I have found to me. This valley reachwate mean through the road

The visible improvement the network made was really cool! The difference between the 1000th and the 5000th iteration is astounding. By 5000 iterations the network has learned to spell many English words correctly, and it is beginning to pick up on the nuances of English grammar.
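To make the "train on text, then write" idea a little more concrete, here is a heavily simplified pure-Python sketch. It swaps Karpathy's recurrent neural network for a character-level bigram model (counts of which character follows which), but the generation loop has the same shape: look at the current character, get a probability distribution over the next character, sample one, and repeat. The toy corpus, the function names, and the `temperature` and `seed` parameters are assumptions for illustration only, not part of his project's interface.

```python
import random
from collections import Counter, defaultdict
from typing import Dict

def train_bigram(text: str) -> Dict[str, Counter]:
    """Count how often each character follows each other character."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return counts

def sample(counts: Dict[str, Counter], start: str, length: int,
           temperature: float = 1.0, seed: int = 0) -> str:
    """Generate text one character at a time from the bigram counts.

    Lower temperature sharpens the distribution toward the most common next
    character; higher temperature makes the output more random.
    """
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])  # .get avoids creating empty entries
        if not followers:  # dead end: this character was never followed by anything
            break
        chars = list(followers)
        # Raising counts to 1/T is equivalent to dividing log-probabilities by T.
        weights = [followers[c] ** (1.0 / temperature) for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

corpus = "the hobbits walked on the road to the shire and the hobbits sang"
model = train_bigram(corpus)
print(sample(model, "t", 40, temperature=0.8))
```

A bigram model only ever sees one character of context, so it can produce plausible letter pairs but not real words; a recurrent network carries information across many characters, which is why its samples can improve from gibberish toward words and grammar as training goes on.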

Conclusion

I am really happy with the results of this project, as I believe it served as a great introduction to working with a deep learning library. It has also pointed to some future work for me, such as learning how to design a neural network and exploring other deep learning libraries.